During the recent pandemic, I came across several works that used a technique called sonification to translate into sound data on the number of deaths and active cases related to COVID-19. Personally, I found the whole period deeply saddening, and emphasising morbid figures through sound seemed to intensify that feeling. Nonetheless, I still wanted to contribute to this corpus of projects, which is closely related to my PhD topic of sonifying smart city data. I therefore decided to address one of the so-called “positive” impacts of the lockdown imposed because of the virus: the reduction of air pollution. Recent reports point towards a positive impact on air pollution levels due to the lockdown policies related to COVID-19, and I wanted to investigate whether information about such impacts could be conveyed through the audio modality. It is important to note that causal relations between lockdown and air quality cannot be assessed within the scope of this work: it is solely an auditory depiction of data whose values, differences and variations might be due to other external factors.

Specifically, this project entails an aural comparison of hourly air pollution levels (NO2 readings) on Mile End Road, London, from a week in April 2019 and a week in April 2020, retrieved from London Air, a tool developed at King’s College London.

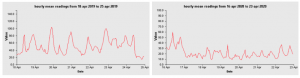

Hourly mean NO2 readings on Mile End Road from a week of April 2019 (left) and 2020 (right).

Sonification is conducted by applying a spectral delay effect to Air on the G String, Wilhelmj’s arrangement of a Bach composition. The spectral delay is built from a total of 10 bandpass filters with different cutoff frequencies; each band is delayed by a different amount and has its own gain. Levels of NO2 are mapped to the feedback and gain of every delay line, as well as to the movement of the cutoff frequencies.
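To make the structure of a spectral delay more concrete, here is a minimal offline sketch in Python. It is not the project's Bela code: the class name `BandDelay`, the function `spectral_delay`, the filter-then-delay ordering, the use of a standard biquad bandpass (Audio EQ Cookbook formulas) and every parameter value are assumptions made purely for illustration.

```python
import math


class BandDelay:
    """One band of a spectral delay (hypothetical helper):
    a biquad bandpass filter followed by a feedback delay line."""

    def __init__(self, sample_rate, centre_hz, q, delay_samples, feedback, gain):
        # Bandpass coefficients (constant 0 dB peak gain form).
        w0 = 2.0 * math.pi * centre_hz / sample_rate
        alpha = math.sin(w0) / (2.0 * q)
        a0 = 1.0 + alpha
        self.b0 = alpha / a0
        self.b2 = -alpha / a0
        self.a1 = -2.0 * math.cos(w0) / a0
        self.a2 = (1.0 - alpha) / a0
        # Filter state: previous two inputs and outputs.
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0
        # Circular buffer holding the delayed band signal.
        self.buffer = [0.0] * delay_samples
        self.pos = 0
        self.feedback = feedback
        self.gain = gain

    def process(self, x):
        # Bandpass the input sample (the b1 coefficient is zero for this filter).
        y = self.b0 * x + self.b2 * self.x2 - self.a1 * self.y1 - self.a2 * self.y2
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        # Read the oldest sample, then write the new one plus feedback.
        delayed = self.buffer[self.pos]
        self.buffer[self.pos] = y + self.feedback * delayed
        self.pos = (self.pos + 1) % len(self.buffer)
        return self.gain * delayed


def spectral_delay(signal, bands, dry=1.0):
    """Mix the dry signal with the delayed output of every band."""
    output = []
    for x in signal:
        wet = sum(band.process(x) for band in bands)
        output.append(dry * x + wet)
    return output


# Example: 10 bands with made-up centre frequencies and delay times.
SAMPLE_RATE = 44100
bands = [
    BandDelay(SAMPLE_RATE, centre_hz=100.0 * 1.6 ** i, q=2.0,
              delay_samples=2000 * (i + 1), feedback=0.4, gain=0.5)
    for i in range(10)
]
impulse_response = spectral_delay([1.0] + [0.0] * 999, bands)
```

With every band gain set to zero the output equals the input, which is the “clean” end of the effect; raising gain and feedback makes the delayed bands more prominent and longer lasting.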

Higher values of NO2 correspond to:

- Higher delay feedback for each line/filter band;

- Higher gain of delayed content for each line/filter band;

- Lower cutoff frequencies for each line/filter band.
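The three mappings listed above could be sketched as a single function. This is only an illustration under stated assumptions: the linear mapping, the function names (`normalise`, `lerp`, `map_no2`), the NO2 range and the parameter limits are all hypothetical and do not come from the project's code.

```python
def normalise(no2, no2_min, no2_max):
    """Scale a NO2 reading to the range [0, 1], clamping out-of-range values."""
    if no2_max <= no2_min:
        raise ValueError("no2_max must be greater than no2_min")
    t = (no2 - no2_min) / (no2_max - no2_min)
    return min(1.0, max(0.0, t))


def lerp(start, end, t):
    """Linear interpolation between start and end for t in [0, 1]."""
    return start + (end - start) * t


def map_no2(no2, base_cutoff_hz,
            no2_min=0.0, no2_max=100.0,   # assumed range of the readings
            max_feedback=0.8,             # assumed upper limit, kept below 1 for stability
            max_gain=1.0,                 # assumed upper limit for the delayed signal
            max_cutoff_drop=0.5):         # assumed: cutoff can fall to half its base value
    """Map one NO2 reading to the parameters of one delay line/filter band."""
    t = normalise(no2, no2_min, no2_max)
    return {
        "feedback": lerp(0.0, max_feedback, t),                 # higher NO2, more feedback
        "gain": lerp(0.0, max_gain, t),                         # higher NO2, louder delays
        "cutoff_hz": base_cutoff_hz * (1.0 - max_cutoff_drop * t),  # higher NO2, lower cutoff
    }


low = map_no2(10.0, base_cutoff_hz=1000.0)
high = map_no2(90.0, base_cutoff_hz=1000.0)
```

Here `high` has more feedback, more gain and a lower cutoff than `low`, matching the direction of each mapping in the list. Other curves, such as an exponential mapping for the cutoff, would fit the same description equally well.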

In summary, low pollution/NO2 levels bring the output close to a “clean” rendition of the piece, whilst high pollution/NO2 levels “pollute” it with delay. Holding a button switches from the 2020 scenario (no button pressed) to the 2019 scenario (button pressed and held). The whole implementation was done using the Bela board.

Further information, including code, is available here.

This work was done as a final assignment for the module Music and Audio Programming (ECS7012P), at Queen Mary University of London.

*******************

Pedro Pereira Sarmento is currently based at the Centre for Digital Music (C4DM), Queen Mary University of London. He is part of the CDT AIM programme, pursuing a PhD on the topic of Musical Smart City, in which he studies new ways of interpreting city data through music.