On 15 July 2025, the Creative Audio Synthesis and Interfaces Workshop was held at Queen Mary University of London (QMUL), organised by AIM COMMA Lab members Jordie Shier, Haokun Tian, and Charalampos Saitis, and supported by the UKRI Centre for Doctoral Training in Artificial Intelligence and Music (AIM) at the Centre for Digital Music (C4DM).


This one-day workshop included a series of talks exploring the intersection of creative audio synthesis and AI-enabled synthesizer programming.

Topics included evolutionary algorithms for sound exploration, synthesizer sound matching, timbre transfer, timbre-based control, reinforcement learning, differentiable digital signal processing, representation learning, and human-machine co-creativity.

Earlier this year, the AIM CDT visited the RITMO Centre for Interdisciplinary Studies in Rhythm, Time, and Motion at the University of Oslo in Norway.

A shared interest in creative applications of audio synthesis and novel interface designs was established during this visit, motivating this follow-up workshop at QMUL.

Researchers from RITMO, The Open University, and the AIM CDT at QMUL were invited to share their work, engage in critical discussion, and map directions for future work. See below for a summary of talks with links to presentation recordings.

An evening concert showcased musical applications of the techniques discussed during the workshop, grounding these discussions in real-world artistic contexts.

Invited Talks

Designing Percussive Timbre Remappings: Negotiating Audio Representations and Evolving Parameter Spaces

Facilitating serendipitous sound discoveries with simulations of open-ended evolution

Autonomous control of synthesis parameters with listening-based reinforcement learning

Can a Sound Matching Model Produce Audio Embeddings that Align with Timbre Similarity Rated by Humans?

GuitarFlow: Realistic Electric Guitar Synthesis From Tablatures via Flow Matching and Style Transfer

- Jackson Loth — Queen Mary University of London

Timbre latent space transformations for interactive musical systems oriented to timbral music-making

Why Synthesizer Parameter Estimation Is Hard and How to Make it Easy

Perceptually Aligned Deep Image Sonification

Modulation Discovery with Differentiable Digital Signal Processing

Musical Performances and Demos

Experience Replay (Performance)

- Vincenzo Madaghiele — University of Oslo

Weaving (Performance)

- Balint Laczko — University of Oslo

Phylogeny (Demo)

- Björn Thor Jónsson — University of Oslo

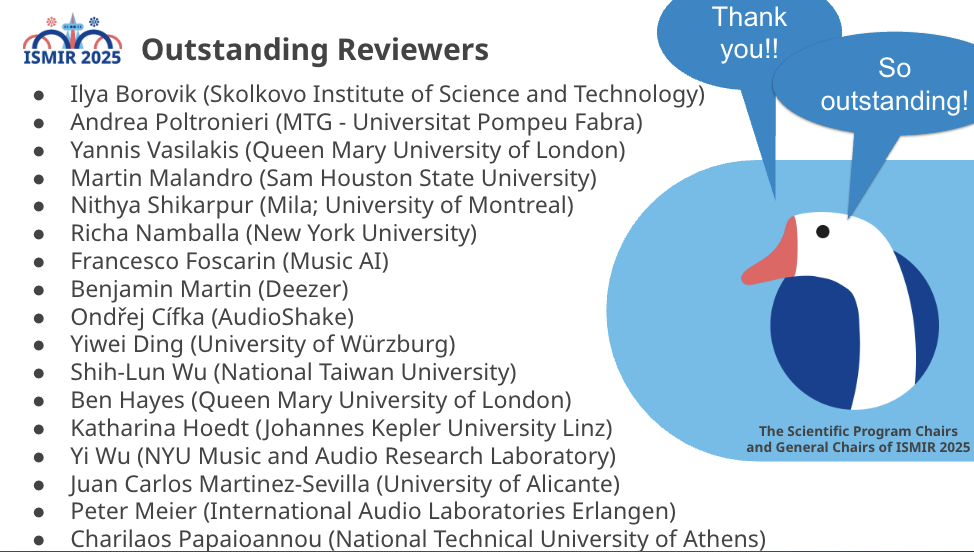

We are delighted to share that AIM PhD student Ben Hayes, along with AIM supervisors Charalampos Saitis and George Fazekas, has received the best student paper award at the ISMIR 2025 conference. The paper "Audio Synthesizer Inversion in Symmetric Parameter Spaces With Approximately Equivariant Flow Matching" proposes using permutation equivariant continuous normalizing flows to handle the ill-posed problem of audio synthesizer inversion, where multiple parameter configurations can produce identical sounds due to intrinsic symmetries in synthesizer design. By explicitly modeling these symmetries, particularly permutation invariance across repeated components such as oscillators and filters, the method outperforms both regression-based approaches and symmetry-naive generative models, on synthetic tasks as well as on a real-world synthesizer (Surge XT).
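To make the symmetry problem concrete, here is a toy sketch. It is not the paper's method or code. It assumes a hypothetical two-oscillator additive sine synthesizer; the `render` helper, `SAMPLE_RATE`, and the parameter values are illustrative only. The sketch shows that swapping the two oscillators produces identical audio. It also shows that averaging the two equally valid parameter vectors, which is roughly what a regressor trained with squared error on parameter labels tends toward, yields a different sound.

```python
import math
from itertools import permutations

SAMPLE_RATE = 8000  # arbitrary toy sample rate (assumption)


def render(oscillators, n_samples=256, sr=SAMPLE_RATE):
    """Hypothetical toy additive synth: sums sine oscillators given as (freq_hz, amp) pairs."""
    return [
        sum(amp * math.sin(2 * math.pi * freq * t / sr) for freq, amp in oscillators)
        for t in range(n_samples)
    ]


def max_abs_diff(a, b):
    """Largest sample-wise difference between two equal-length signals."""
    return max(abs(x - y) for x, y in zip(a, b))


params = [(220.0, 0.5), (330.0, 0.3)]
reference = render(params)

# Every ordering of the two oscillators renders the same audio, so the mapping
# from parameters to sound is many-to-one and its inverse is not unique.
for perm in permutations(params):
    print(perm, max_abs_diff(render(list(perm)), reference))

# A regressor trained with squared error on parameter labels is pulled toward the
# mean of equally valid labels. Averaging the two orderings gives two identical
# oscillators at about (275 Hz, 0.4), which renders a different sound.
swapped = list(reversed(params))
averaged = [((f1 + f2) / 2, (a1 + a2) / 2) for (f1, a1), (f2, a2) in zip(params, swapped)]
print(averaged)
print(max_abs_diff(render(averaged), reference))
```

The paper addresses this by modeling the distribution over parameter configurations with permutation equivariant flows instead of predicting a single averaged answer. The toy example above only illustrates why that distinction matters.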


This wraps up a fantastic week at ISMIR 2025 in Daejeon, South Korea, with very strong AIM participation. Pictured are current and past C4DM members, including many AIM members.

We are delighted to share that Mary Pilataki, a PhD student at AIM, has received the Best Paper Award at the Audio Engineering Society International Conference on Artificial Intelligence and Machine Learning for Audio (AES AIMLA) 2025.